Distinction From The Hessian of The Entropy

In certain cases, the Fisher Information matrix is the negative of the Hessian of the Shannon entropy. The cases where this explicitly holds is given below. A distribution's Shannon entropy

has as the negative of the entry of its Hessian:

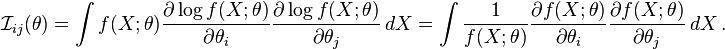

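In contrast, the $(i, j)$ entry of the Fisher information matrix is

$$\mathcal{I}_{i,j} = \int f(X; \theta) \, \frac{\partial \log f(X; \theta)}{\partial\theta_i} \frac{\partial \log f(X; \theta)}{\partial\theta_j} \, dX = \int \frac{1}{f(X; \theta)} \frac{\partial f(X; \theta)}{\partial\theta_i} \frac{\partial f(X; \theta)}{\partial\theta_j} \, dX$$

The difference between the negative Hessian of the entropy $H$ and the Fisher information is

$$-\frac{\partial^2 H}{\partial\theta_i \, \partial\theta_j} - \mathcal{I}_{i,j} = \int \frac{\partial^2 f(X; \theta)}{\partial\theta_i \, \partial\theta_j} \bigl( 1 + \log f(X; \theta) \bigr) \, dX$$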

This extra term vanishes if one considers the Hessian of the relative entropy instead of the Hessian of the Shannon entropy.
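
As a rough numerical check of the relation above, the sketch below compares three independently computed quantities for a Gaussian density with parameters $(\mu, \sigma)$: the negative second derivative of the entropy, the Fisher information, and the extra term $\int \frac{\partial^2 f}{\partial\theta_i^2} (1 + \log f) \, dX$. Only diagonal entries ($i = j$) are checked. The choice of a Gaussian, the helper names (`pdf`, `integrate`, `shift`, `diagonal_terms`), the integration range, and the step size `eps` are all assumptions made for this illustration and do not come from the text.

```python
import math


def pdf(x, theta):
    """Gaussian density; theta = (mu, sigma) is an assumed example family."""
    mu, sigma = theta
    z = (x - mu) / sigma
    return math.exp(-0.5 * z * z) / (sigma * math.sqrt(2.0 * math.pi))


def integrate(g, lo=-12.0, hi=12.0, n=4000):
    """Composite trapezoid rule on [lo, hi] (range chosen for this example)."""
    h = (hi - lo) / n
    total = 0.5 * (g(lo) + g(hi))
    for k in range(1, n):
        total += g(lo + k * h)
    return total * h


def shift(theta, i, eps):
    """Return a copy of theta with component i moved by eps."""
    t = list(theta)
    t[i] += eps
    return tuple(t)


def entropy(theta):
    """Shannon entropy H = -integral of f log f."""
    return -integrate(lambda x: pdf(x, theta) * math.log(pdf(x, theta)))


def diagonal_terms(theta, i, eps=1e-3):
    """Return (negative Hessian entry, Fisher entry, extra term) for index (i, i)."""
    up, down = shift(theta, i, eps), shift(theta, i, -eps)

    # Negative Hessian of the entropy via a central second difference.
    neg_hess = -(entropy(up) - 2.0 * entropy(theta) + entropy(down)) / eps**2

    # Fisher information: integral of f times the squared score.
    def fisher_integrand(x):
        score = (math.log(pdf(x, up)) - math.log(pdf(x, down))) / (2.0 * eps)
        return pdf(x, theta) * score * score

    # Extra term: integral of (second derivative of f) * (1 + log f).
    def extra_integrand(x):
        d2f = (pdf(x, up) - 2.0 * pdf(x, theta) + pdf(x, down)) / eps**2
        return d2f * (1.0 + math.log(pdf(x, theta)))

    return neg_hess, integrate(fisher_integrand), integrate(extra_integrand)


theta = (0.3, 1.2)
for i, name in enumerate(("mu", "sigma")):
    neg_hess, fisher, extra = diagonal_terms(theta, i)
    print(f"{name}: -Hessian={neg_hess:.5f} Fisher={fisher:.5f} "
          f"difference={neg_hess - fisher:.5f} extra={extra:.5f}")
```

For this family the difference and the extra term should agree up to discretization error, and neither is zero, so the negative Hessian of the entropy does not coincide with the Fisher information here.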
